In this post, we learn what cache memory is and the different types of cache memory.

What Is Cache Memory

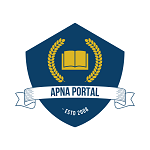

Cache memory is a small, fast memory built from static RAM (SRAM) that the processor can access easily and quickly. It stores the data that the processor uses most frequently, so the cache is the first place the processor checks when it needs data. If the data is already in the cache, it can be retrieved from there quickly, and the slower main memory does not need to be accessed. Cache memory is illustrated in the figure above.
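The "check the cache first" rule can be sketched in a few lines of Python. This is a simplified model, not how the hardware is built: a dictionary stands in for the cache, a list stands in for main memory, and the names `MAIN_MEMORY`, `read()` and `stats` are hypothetical. Real caches have a small, fixed size, which this sketch ignores.

```python
# Simplified model: a dict plays the role of the cache and a list plays the
# role of the slower main memory. All names here are hypothetical.

MAIN_MEMORY = [n * 10 for n in range(1024)]  # pretend contents of main memory

cache = {}                        # address -> value (unbounded in this sketch)
stats = {"cache": 0, "main": 0}   # where each read was served from


def read(address):
    """Return the value at address, checking the cache before main memory."""
    if address in cache:          # already cached: fast path
        stats["cache"] += 1
        return cache[address]
    stats["main"] += 1            # not cached: go to slower main memory
    value = MAIN_MEMORY[address]
    cache[address] = value        # keep a copy for the next access
    return value


for addr in [5, 7, 5, 5, 7, 9]:
    read(addr)

print(stats)  # {'cache': 3, 'main': 3}
```

Repeated addresses (5 and 7) are served from the cache after their first access, which is exactly the benefit the telephone-book example below describes.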

Cache Memory Concept: An Example

Consider a telephone directory that lists the telephone numbers of all users in a city. When a person wants to call someone, he looks into the telephone directory, searches for that user, and gets the telephone number. He then writes the number down in his personal phone index book.

The next time he wants to call that person, he quickly looks up the number in the index book instead of searching the telephone directory again. Finding a number in the small index book takes much less time than searching the large telephone directory.

The person making the call is like the processor, the large telephone directory is like main memory (RAM), and the small phone index book is like the cache memory. The processor stores frequently used data in the cache memory, which improves access speed. Cache memory is also built from SRAM, which is faster than the DRAM used for main memory. However, cache memory is costly and has a limited capacity. The hierarchy below lists storage from fastest to slowest; access speed decreases as we move down the list.

- Registers

- L1 Cache

- L2 Cache

- L3 Cache

- RAM

- Disk Cache

- Disk

- Optical Storage

- Tape

Level 1 Cache Memory

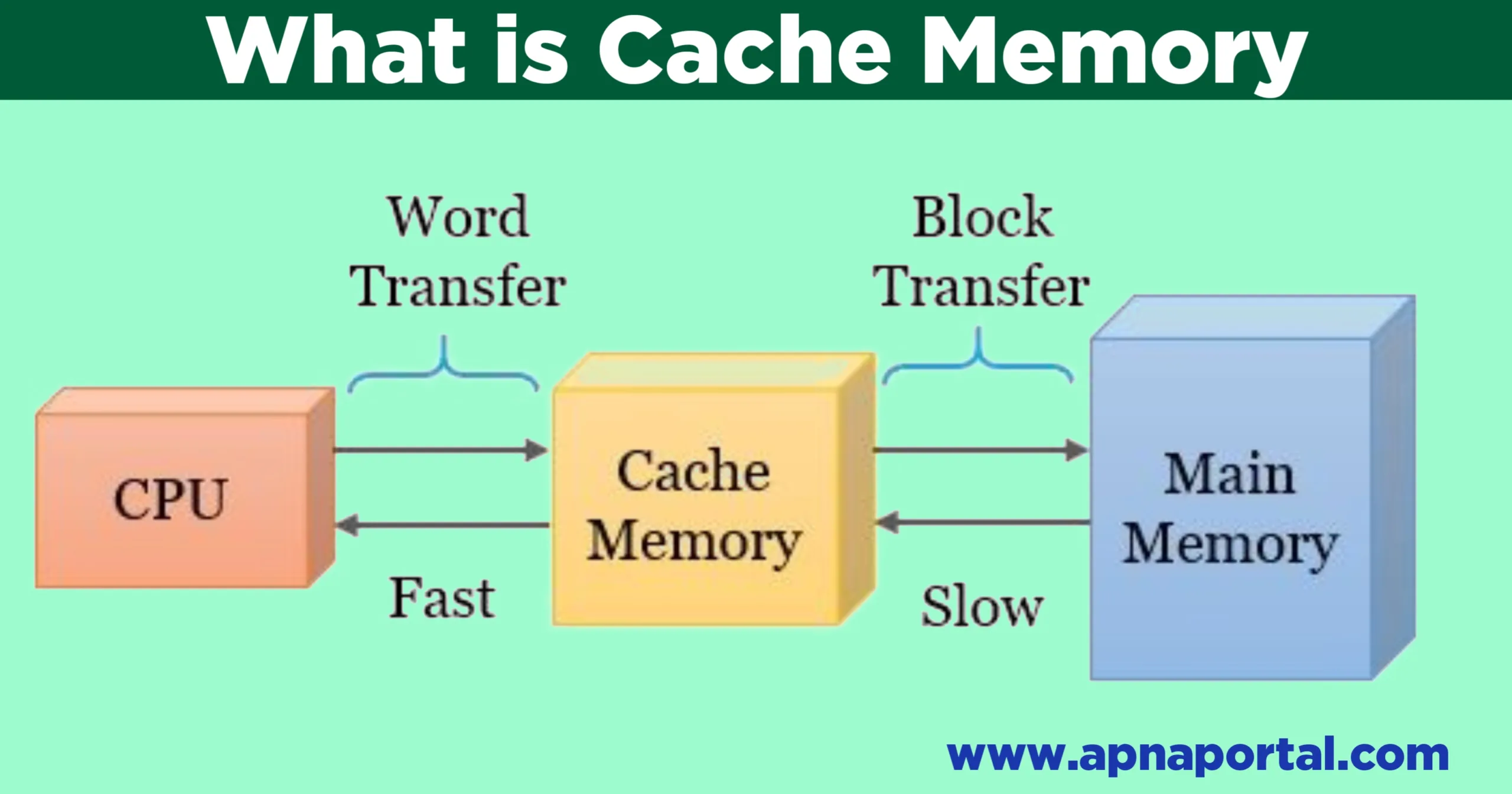

Level 1 cache memory (L1 cache), also called primary cache, is built into the microprocessor. The Intel 80486 had an 8 KB on-chip L1 cache, while the original Pentium processors had a 16 KB L1 cache. Because it sits inside the processor and the CPU can access it directly, L1 cache is the fastest cache memory.

In the figure, the many arrows between the registers and the L1 cache indicate the large number of data transfers between them, which is the fastest data transfer in the hierarchy. The L1 cache has a smaller capacity than the L2 cache or RAM. Data transfer between the L2 cache and the L1 cache is slower, as shown by fewer arrows, but the L2 cache has a larger capacity than the L1 cache. Data transfer between RAM and the L2 cache is slower still, but RAM has a greater storage capacity than either the L1 or the L2 cache.
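The lookup order described above (L1 first, then L2, then RAM) can be sketched as follows. The latencies are hypothetical round numbers chosen only to reflect the fast-to-slow ordering; they are not measurements of any real processor. The sketch also assumes that an address fetched from RAM is copied into both cache levels, and it does not model cache capacity.

```python
# Walk the hierarchy L1 -> L2 -> RAM and add up the time spent.
# Latencies (in CPU cycles) are hypothetical values for illustration only.

LEVELS = [("L1", 4), ("L2", 12)]   # (name, access latency in cycles)
RAM_LATENCY = 200

caches = {name: set() for name, _ in LEVELS}  # addresses held at each level


def access(address):
    """Return (level that served the request, total cycles spent)."""
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency               # pay for checking this level
        if address in caches[name]:
            return name, cycles
    cycles += RAM_LATENCY               # missed in every cache level
    for name, _ in LEVELS:
        caches[name].add(address)       # fill the caches on the way back
    return "RAM", cycles


print(access(0x40))          # ('RAM', 216)  first access misses L1 and L2
print(access(0x40))          # ('L1', 4)     now served from L1
caches["L1"].discard(0x40)   # simulate L1 evicting the data (L1 is small)
print(access(0x40))          # ('L2', 16)    served from the larger L2
```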

Level 2 Cache Memory

Level 2 cache memory (L2 cache), also known as secondary cache, was a separate chip on the motherboard in older systems; in modern processors it is integrated into the processor itself. The size of the L2 cache was the primary distinction between Intel's Pentium and Celeron processors: Celeron processors had a 128 KB L2 cache built into the processor, whereas the Pentium II and early Pentium III processors had a 512 KB L2 cache. Hence the Celeron was cheaper and slower, whereas the Pentium was costlier and faster.

Level 3 Cache Memory

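As technology advanced, many processors began to include the L2 cache within their own architecture. Level 3 cache (L3 cache) is an additional cache level between the L2 cache and main memory. It is larger but slower than the L2 cache and, in multi-core processors, is usually shared by all the cores.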

Disk Cache

Disk cache improves system performance by reducing the time taken to read from and write to a hard disk. Nowadays, the disk cache is usually a small amount of memory built into the hard disk itself; it can also be a portion of RAM set aside by the operating system. The disk cache stores recently used data. Data adjacent to what was just read, which is likely to be accessed next, can also be placed in the disk cache to further improve performance. Because this memory costs more per byte than disk storage, the disk cache is small, but it is effective.

What Is a Cache Hit and a Cache Miss

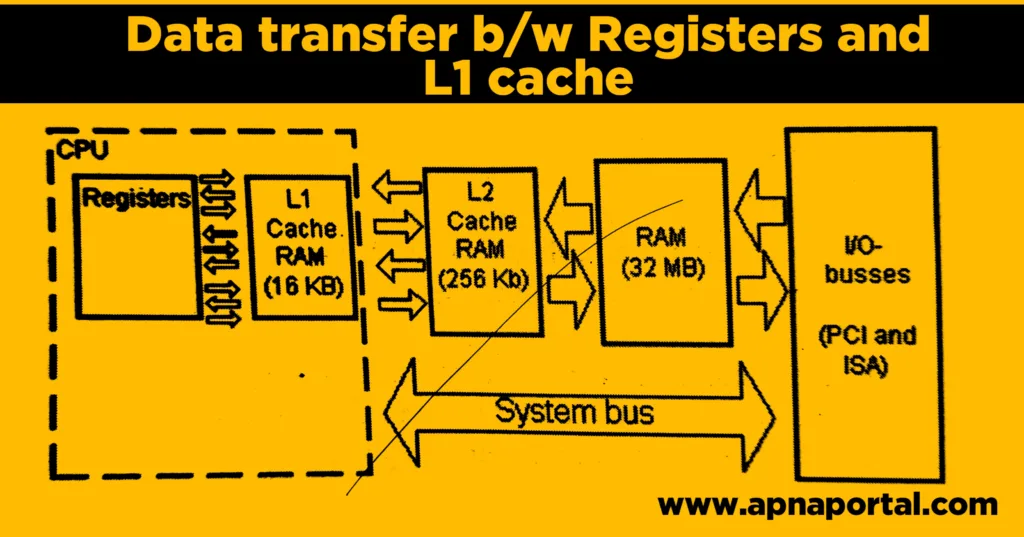

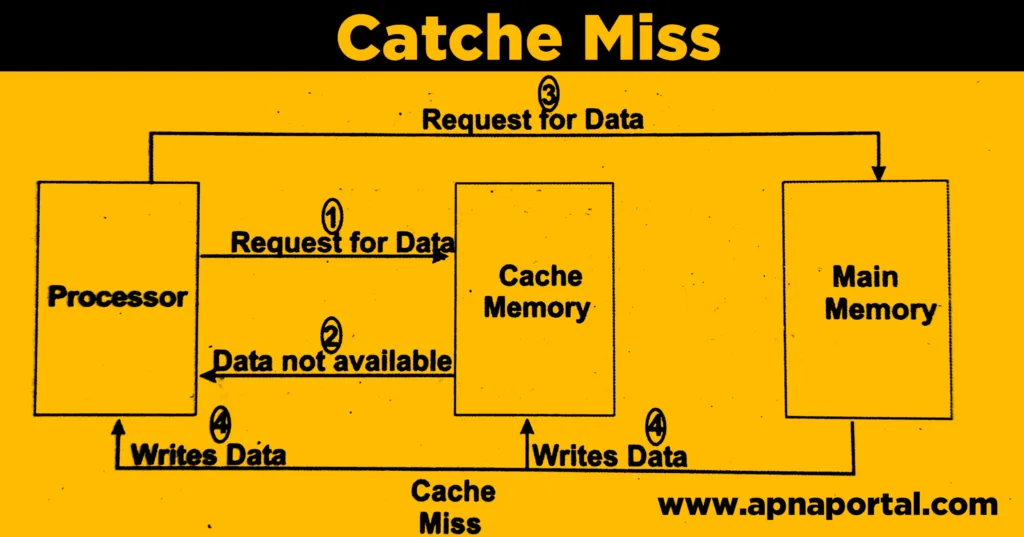

When the processor needs to read from memory, it first looks into the cache memory. If the data is present in the cache, the processor gets it from there; this is called a cache hit. If the data is not in the cache, this is called a cache miss, and the processor must access main memory to fetch the data.

The fetched data is then copied into the cache memory so that it can be retrieved from the cache the next time it is accessed. For better performance, the hit ratio (the fraction of memory accesses that are cache hits) should be high. The figures above illustrate a cache hit and a cache miss.
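To see why a high hit ratio matters, the sketch below computes the hit ratio h and a simple average access time, assuming the cache is always checked first, so a miss costs the cache lookup plus the main-memory access: average time = t_cache + (1 − h) × t_main. The timings (1 ns for the cache, 100 ns for main memory) are hypothetical values for illustration only.

```python
def hit_ratio(hits, misses):
    """Fraction of accesses served by the cache."""
    return hits / (hits + misses)


def average_access_time(hits, misses, t_cache, t_main):
    """Average time per access when the cache is always checked first.

    A hit costs t_cache; a miss costs t_cache (the failed lookup) plus t_main.
    """
    miss_ratio = misses / (hits + misses)
    return t_cache + miss_ratio * t_main


# Hypothetical timings: 1 ns for the cache, 100 ns for main memory.
for hits, misses in [(950, 50), (800, 200)]:
    h = hit_ratio(hits, misses)
    t = average_access_time(hits, misses, t_cache=1, t_main=100)
    print(f"hit ratio {h:.2f} -> average access time {t:.1f} ns")
# hit ratio 0.95 -> average access time 6.0 ns
# hit ratio 0.80 -> average access time 21.0 ns
```

Under these assumed timings, dropping the hit ratio from 0.95 to 0.80 makes the average access more than three times slower.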

The processor accesses the cache memory for data. If the data is not available in the cache, it is fetched from main memory and then copied into the cache.

If the data is available in the cache, it is accessed from the cache. When the processor modifies that data, the result is stored in the cache memory, so at that instant the data in the cache and the data in main memory are no longer synchronized. To synchronize them, main memory must be updated. There are two ways to do this:

- Write-through

- Write-back

Write-Back Policy

In this technique, modifications made to data in the cache memory are copied to main memory only when necessary, typically when the modified cache line is about to be replaced by other data. This technique is used by many processors, including Intel processors since the Pentium. Because the write to main memory is deferred rather than done immediately, repeated changes to the same data in the cache (for example, in the L1 cache) result in far fewer writes to main memory.
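A minimal sketch of the write-back idea, under simplifying assumptions: the class name `WriteBackCache` and its methods are hypothetical, main memory is a Python dict, and the cache holds only a few lines that are evicted oldest-first (FIFO), a replacement rule chosen just to keep the example short. Each line carries a "dirty" flag, and main memory is written only when a dirty line is evicted or flushed.

```python
from collections import OrderedDict


class WriteBackCache:
    """Toy write-back cache with a dirty flag per line and FIFO eviction."""

    def __init__(self, memory, capacity):
        self.memory = memory              # dict: address -> value (main memory)
        self.capacity = capacity
        self.lines = OrderedDict()        # address -> [value, dirty]
        self.memory_writes = 0

    def _load(self, address):
        if address not in self.lines:
            if len(self.lines) == self.capacity:
                # Evict the oldest line; copy it back only if it was modified.
                old_addr, (old_value, dirty) = self.lines.popitem(last=False)
                if dirty:
                    self.memory[old_addr] = old_value
                    self.memory_writes += 1
            self.lines[address] = [self.memory[address], False]
        return self.lines[address]

    def read(self, address):
        return self._load(address)[0]

    def write(self, address, value):
        line = self._load(address)
        line[0] = value
        line[1] = True                    # main memory is now stale here

    def flush(self):
        """Copy every dirty line back to main memory (e.g. before shutdown)."""
        for address, line in self.lines.items():
            if line[1]:
                self.memory[address] = line[0]
                self.memory_writes += 1
                line[1] = False


memory = {a: 0 for a in range(8)}
cache = WriteBackCache(memory, capacity=2)
for i in range(100):
    cache.write(0, i)                     # 100 writes to the same address
print(memory[0], cache.memory_writes)     # 0 0   main memory not yet updated
cache.flush()
print(memory[0], cache.memory_writes)     # 99 1
```

Here 100 writes to the same address cause a single main-memory write, at flush time. Until then, main memory holds a stale value, which is why a crash before the flush would lose the update.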

Write-Through Policy

In the write-through policy, every write updates the cache memory and main memory at the same time. This reduces risk: because main memory is always up to date, no data is lost if the system crashes. However, every write must also be performed on main memory, so many more write operations are needed. The write-back policy reduces the number of write operations and provides better performance, at the risk of data loss if the system crashes.
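The trade-off between the two policies can be made concrete by counting main-memory writes for the same sequence of writes. This is a simplified sketch: `count_memory_writes()` is a hypothetical helper, the cache is an unbounded dict with no eviction, and write-back's deferred updates are modelled as a single flush at the end.

```python
def count_memory_writes(policy, writes):
    """Count main-memory writes for a list of (address, value) writes.

    policy is "write-through" or "write-back". The cache is an unbounded
    dict with no eviction, an assumption made only to isolate the policy.
    """
    memory, cache, dirty = {}, {}, set()
    memory_writes = 0
    for address, value in writes:
        cache[address] = value
        if policy == "write-through":
            memory[address] = value        # main memory updated on every write
            memory_writes += 1
        else:                              # "write-back"
            dirty.add(address)             # main memory updated later
    if policy == "write-back":
        for address in dirty:              # deferred update, once per address
            memory[address] = cache[address]
            memory_writes += 1
    return memory_writes


# One address updated 1000 times (e.g. a counter), plus one other write.
writes = [(0x10, i) for i in range(1000)] + [(0x20, 7)]
print(count_memory_writes("write-through", writes))  # 1001
print(count_memory_writes("write-back", writes))     # 2
```

Between the last write and the flush, main memory in the write-back case holds stale values; a crash in that window is the data-loss risk mentioned above.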

Advantages of Cache Memory

Faster Data Access: Cache memory is much faster than main memory (RAM). It allows the CPU to access frequently used instructions and data quickly, reducing the time it takes to fetch information from the slower main memory.

Improved System Performance: By storing frequently accessed data and instructions in cache, the overall system performance is enhanced. This is especially beneficial for applications that repeatedly use the same set of instructions or data.

Reduced Memory Latency: Cache memory reduces the latency associated with accessing data from main memory. Since cache is closer to the CPU, the time it takes to retrieve information is significantly lower compared to accessing it from RAM.

Lower Power Consumption: Accessing data from cache requires less power compared to fetching it from main memory. This can contribute to overall energy efficiency in a computing system.

Cost-Effective: Although cache memory is expensive per byte, only a small amount of it is needed to serve most memory accesses. This makes it a cost-effective way to improve system performance without a significant increase in overall hardware cost.

Disadvantages of Cache Memory

Limited Capacity: Compared to main memory, the capacity of cache memory is limited. When a program's frequently used data does not fit in the cache, some of it must be replaced, so data that is needed may not be present in the cache and has to be fetched again from slower main memory.

Expensive: Despite being smaller than main memory, cache memory is more expensive per unit of storage. This cost can be a limiting factor when designing systems with larger cache sizes.

Complex Management: Managing cache memory involves sophisticated algorithms and hardware mechanisms. It adds complexity to the design of computer systems, and improper management can lead to performance issues.

Consistency Issues: Cache introduces the possibility of data inconsistency. If the data in the main memory is modified and the corresponding data in the cache is not updated, it can lead to discrepancies in the information used by the CPU.

Heat Generation: Constantly accessing and updating cache memory can generate additional heat in the system. While this might not be a significant issue in small-scale systems, it can contribute to heat-related challenges in high-performance computing environments.
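The data-inconsistency problem listed under Consistency Issues can be illustrated with a small sketch. Here `dma_write()` is a hypothetical stand-in for any agent, such as a DMA device or another processor, that updates main memory without going through this cache; `invalidate()` shows one simple remedy, discarding the cached copy so the next read fetches fresh data.

```python
memory = {0x100: "old"}
cache = {}


def cpu_read(address):
    """Read through the cache, filling it from main memory on a miss."""
    if address not in cache:
        cache[address] = memory[address]
    return cache[address]


def dma_write(address, value):
    """Hypothetical device write that bypasses the cache entirely."""
    memory[address] = value


def invalidate(address):
    """Drop the cached copy so the next read refetches from main memory."""
    cache.pop(address, None)


print(cpu_read(0x100))   # old
dma_write(0x100, "new")
print(cpu_read(0x100))   # old  (stale: cache and main memory disagree)
invalidate(0x100)
print(cpu_read(0x100))   # new
```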